Gut Feelings About AI

Like the rest of you, I’ve been keeping a close eye on the burgeoning AI revolution in the hope of understanding it before it understands me. Good luck to us all.

An early development has been the reaction of intellectual property holders to having their content included in the training of Large Language Models (LLMs). Entities like the NY Times have sued to stop their inclusion (or, more accurately, their uncompensated inclusion) in training datasets.

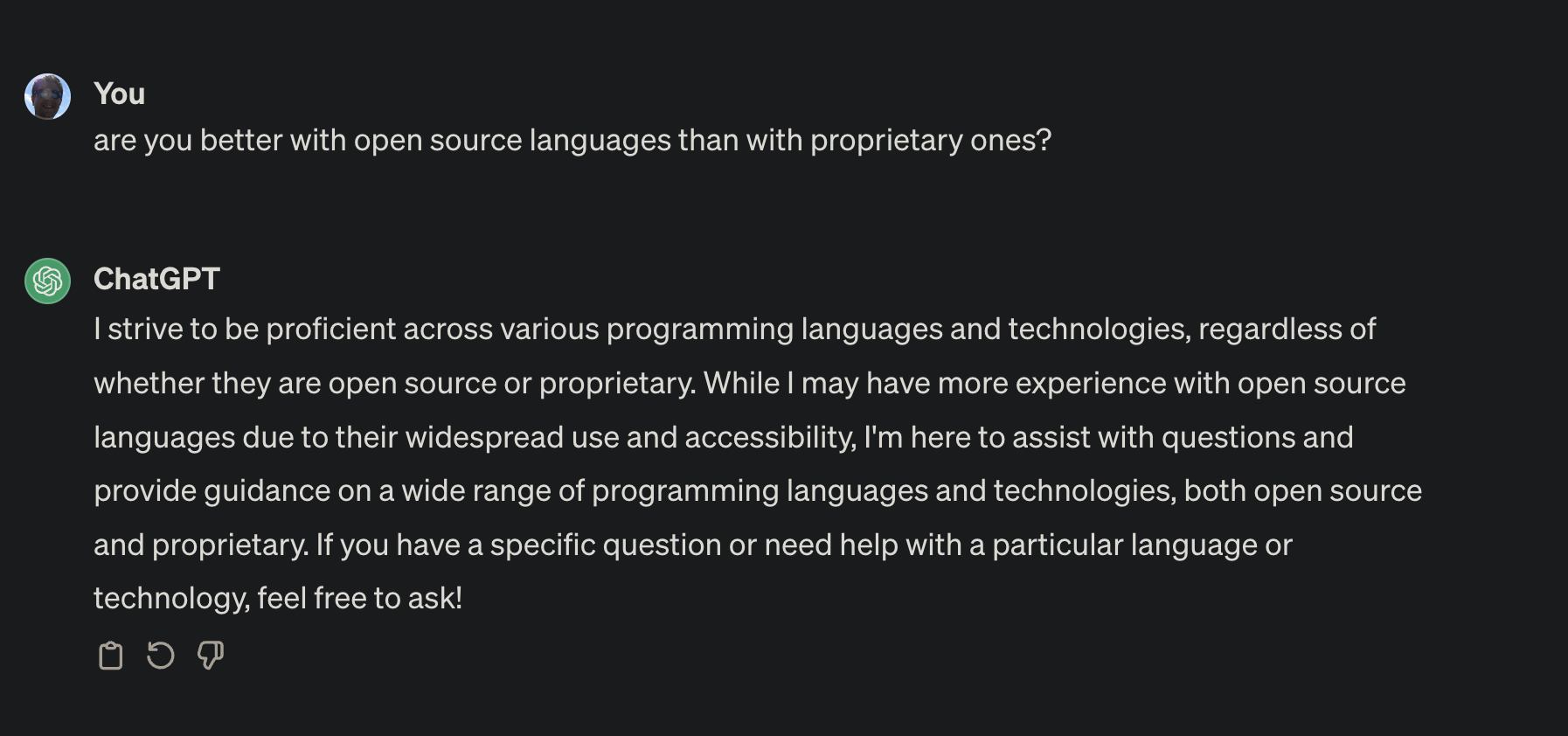

Fair enough. But what does this mean for AI’s understanding of technical knowledge such as programming languages? Wouldn’t open source be more easily available to train on? I wanted to know what ChatGPT (3.5, the free one) thought:

It’s clear that open source succeeds in this respect: it makes its knowledge public by design, and so it has been incorporated comprehensively into these first LLMs, the amazing new teachers of the next generation of developers.

Anecdotally, this is borne out. Developers are getting much better answers when asking about open source and “foundational” technologies than when asking about proprietary software platforms. It wouldn’t be surprising to see a push by private interests to open up their documentation stores to be freely perused by AI scraper bots, so they’re not left out.

Having your data available for AI training will be just as desirable as having your website at the top of search results. It’ll be more desirable, and we’ll even pay for it, as we ended up doing for search results. The equivalent of being ‘atop’ search results will be that your data was lingered on by the machine learning AI, refined, and ultimately presented to humans more cogently, perhaps now with your own biases. That product will sell like hotcakes of glorious disinformation.

Let’s follow your precious data, as though it were food, down the GI/AI tract. It will…

- be ingested by an LLM

- cry “oh no! I’ve been eaten!” and struggle a bit

- break down and join the gut biome